Here’s part 11 of the ongoing serialization of Refactoring HTML, also available from Amazon and Safari.

This part’s a little funny because really it deserves an entire book on its own, and that book has yet to be written. I didn’t have space or time to write a complete second book about test driven development of web sites and web applications, but perhaps this small piece will inspire someone else to do it. If not, maybe I’ll get to it one of these days. 🙂

In theory, refactoring should not break anything that isn’t already broken. In practice, it isn’t always so reliable. To some extent, the catalog later in this book shows you what changes you can safely make. However, both people and tools make mistakes, and it’s always possible that refactoring will introduce new bugs. Thus, the refactoring process really needs a good automated test suite. After every refactoring, you’d like to be able to press a button and see at a glance whether anything broke.

Although test-driven development has been a massive success among traditional programmers, it is not yet so common among web developers, especially those working on the front end. In fact, any automated testing of web sites is probably the exception rather than the rule, especially when it comes to HTML. It is time for that to change. It is time for web developers to start to write and run test suites and to use test-driven development.

The basic test-driven development approach is as follows:

1. Write a test for a feature.

2. Code the simplest thing that can possibly work.

3. Run all tests.

4. If tests passed, goto 1.

5. Else, goto 2.
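To make the loop concrete, here is a minimal sketch of one pass through it in Python, using only the standard library. The feature under test ("every page carries the title it was asked for") and the `render_page` function are hypothetical stand-ins invented for illustration; a real site would exercise its own templates or its running server instead.

```python
import unittest
from xml.etree import ElementTree
from xml.sax.saxutils import escape

# Namespace prefix that ElementTree uses for XHTML element names.
XHTML_NS = "{http://www.w3.org/1999/xhtml}"


def render_page(title):
    """Hypothetical page generator: the simplest thing that can possibly work."""
    safe_title = escape(title)  # keep the output well-formed if the title has & or <
    return (
        '<html xmlns="http://www.w3.org/1999/xhtml">'
        "<head><title>" + safe_title + "</title></head>"
        "<body><h1>" + safe_title + "</h1></body>"
        "</html>"
    )


class TitleTest(unittest.TestCase):
    """Written first; it fails until render_page exists and emits a title."""

    def test_page_has_requested_title(self):
        root = ElementTree.fromstring(render_page("Contact Us"))
        title = root.find(XHTML_NS + "head/" + XHTML_NS + "title")
        self.assertIsNotNone(title)
        self.assertEqual("Contact Us", title.text)
```

Saved in a file, this can be run with `python -m unittest`. If the test passes, you write the next test; if it fails, you go back to `render_page`.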

For refactoring purposes, it is very important that this process be as automatic as possible. In particular:

- The test suite should not require any complicated setup. Ideally, you should be able to run it with the click of a button. You don’t want developers to skip running tests because they’re too hard to run.

- The tests should be fast enough that they can be run frequently; ideally, they should take 90 seconds or less to run. You don’t want developers to skip running tests because they take too long.

- The result must be pass or fail, and it should be blindingly obvious which it is. If the result is fail, the failed tests should generate more output explaining what failed. However, passing tests should generate no output at all, except perhaps for a message such as “All tests passed”. In particular, you want to avoid the common problem in which one or two failing tests get lost in a sea of output from passing tests.
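The last requirement, silence on success and detail on failure, can be sketched in a few lines of Python. This is one possible arrangement, not the only one: `run_quietly` is a name made up for this example, and it simply wraps the standard `unittest` runner so that its normal output is captured and shown only when something fails.

```python
import io
import unittest


def run_quietly(suite):
    """Run a test suite and return (passed, report).

    On success the report is a single short line. On failure it is the
    runner's captured output, which describes only the tests that failed.
    """
    buffer = io.StringIO()
    runner = unittest.TextTestRunner(stream=buffer, verbosity=0)
    result = runner.run(suite)
    if result.wasSuccessful():
        return True, "All tests passed"
    return False, buffer.getvalue()


# Possible usage, assuming a hypothetical "tests" directory of test modules:
#
#     suite = unittest.defaultTestLoader.discover("tests")
#     passed, report = run_quietly(suite)
#     print(report)
#     raise SystemExit(0 if passed else 1)
```

Because the runner is asked for its least verbose output and that output is discarded on success, one failing test cannot get lost among hundreds of passing ones.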

Writing tests for web applications is harder than writing tests for classic applications. Part of this is because the tools for web application testing aren’t as mature as the tools for traditional application testing. Part of this is because any test that involves looking at something and figuring out whether it looks right is hard for a computer. (It’s easy for a person, but the goal is to remove people from the loop.) Thus, you may not achieve the perfect coverage you can in a Java or .NET application. Nonetheless, some testing is better than none, and you can in fact test quite a lot.

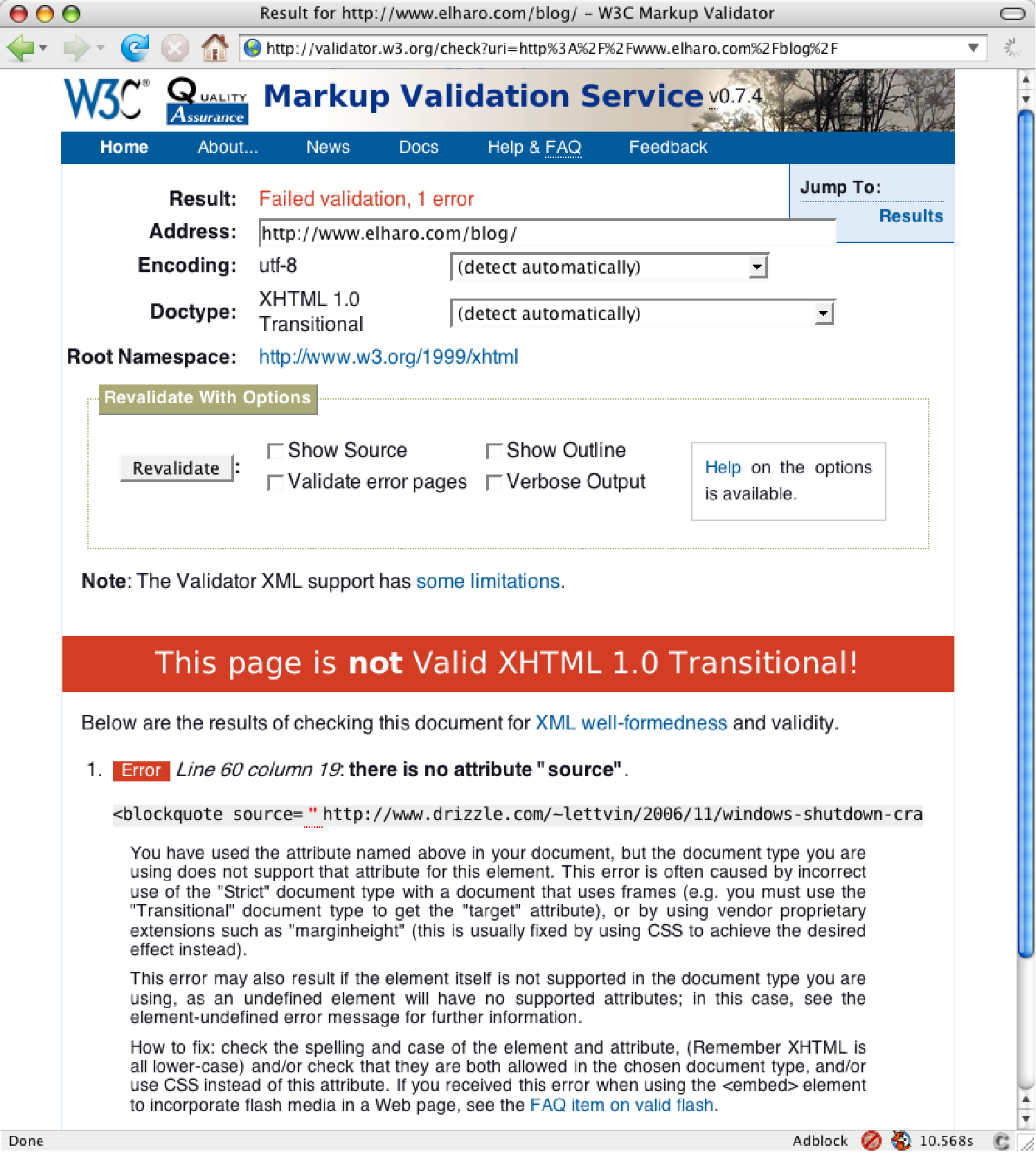

One thing you will discover is that refactoring your code to web standards such as XHTML is going to make testing a lot easier. Going forward, it is much easier to write tests for well-formed and valid XHTML pages than for malformed ones. This is because it is much easier to write code that consumes well-formed pages than malformed ones. It is much easier to see what the browser sees, because all browsers see the same thing in well-formed pages and different things in malformed ones. Thus, one benefit of refactoring is improving testability and making test-driven development possible in the first place. Indeed, with a lot of web sites that don’t already have tests, you may need to refactor them enough to make testing possible before moving forward.
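As a small illustration of why well-formed pages are easier to consume, the sketch below checks well-formedness with nothing more than the XML parser in Python's standard library. The function name `well_formedness_error` is invented for this example. One caveat: this parser does not load the XHTML DTD, so a page that uses named entities such as `&nbsp;` is reported as an error here even though it may be valid XHTML; a real test suite would need a parser that resolves them.

```python
from xml.etree import ElementTree


def well_formedness_error(markup):
    """Return None if markup parses as XML, otherwise a short error description."""
    try:
        ElementTree.fromstring(markup)
    except ElementTree.ParseError as error:
        return str(error)
    return None


# A properly closed <br /> parses; an unclosed <br> does not.
print(well_formedness_error("<p>One<br />Two</p>"))
print(well_formedness_error("<p>One<br>Two</p>"))
```

Once a page passes a check like this, every further test can load it with an ordinary XML parser and inspect its elements directly, instead of guessing how a browser would repair the markup.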

You can use many tools to test web pages, ranging from decent to horrible and free to very expensive. Some of these are designed for programmers, some for web developers, and some for business domain experts. They include:

- HtmlUnit

- JsUnit

- HttpUnit

- JWebUnit

- FitNesse

- Selenium

In practice, the rough edges on these tools make it very helpful to have an experienced agile programmer develop the first few tests and the test framework. Once you have an automated test suite in place, it is usually easier to add more tests yourself.
