April 20th, 2010

Every time the subject of checked versus runtime exceptions comes up, someone cites Bruce Eckel as an argument by authority. This is unfortunate, because, as much as I like and respect Bruce, he is out to sea on this one. Nor is it merely a matter of opinion. In this case, Bruce is factually incorrect. He believes things about checked exceptions that just aren’t true; and I think it’s time to lay his misconceptions to rest once and for all.

Let’s see exactly what Bruce’s mistake is. The following is an extended selection from Thinking in Java, 4th edition, pp. 490-491:

An exception-handling system is a trapdoor that allows your program to abandon execution of the normal sequence of statements. The trapdoor is used when an “exceptional condition” occurs, such that normal execution is no longer possible or desirable. Exceptions represent conditions that the current method is unable to handle. The reason exception-handling systems were developed is because the approach of dealing with each possible error condition produced by each function call was too onerous, and programmers simply weren’t doing it. As a result, they were ignoring the errors. It’s worth observing that the issue of programmer convenience in handling errors was a prime motivation for exceptions in the first place.

One of the important guidelines in exception handling is “Don’t catch an exception unless you know what to do with it.” In fact, one of the important goals of exception handling is to move the error-handling code away from the point where the errors occur. This allows you to focus on what you want to accomplish in one section of your code, and how you’re going to deal with problems in a distinct separate section of your code. As a result, your mainline code is not cluttered with error-handling logic, and it’s much easier to understand and maintain. Exception handling also tends to reduce the amount of error-handling code, by allowing one handler to deal with many error sites.

Checked exceptions complicate the scenario a bit, because they force you to add catch clauses in places where you may not be ready to handle an error. This results in the “harmful if swallowed” problem:

```java
try {
    // ... to do something useful
} catch (ObligatoryException e) {} // Gulp!
```

Do you see the mistake? It’s a common one.
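For comparison, here is a minimal sketch of one standard Java alternative to the empty catch block: a method may declare a checked exception in its `throws` clause instead of catching it, so the exception propagates to a single handler that actually knows what to do with it. The class and method names (`PropagateDemo`, `readFirstLine`, `firstLineLength`, `describe`) are hypothetical, and `java.io.IOException` stands in for Eckel’s `ObligatoryException`.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;

public class PropagateDemo {

    // Declares the checked exception instead of catching it; no empty catch needed.
    static String readFirstLine(String text) throws IOException {
        try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
            String line = reader.readLine();
            if (line == null) {
                throw new IOException("empty input");
            }
            return line;
        }
    }

    // Intermediate method: it doesn't know how to handle the error, so it passes it on.
    static int firstLineLength(String text) throws IOException {
        return readFirstLine(text).length();
    }

    // One handler at the top deals with errors from every call site below it.
    static String describe(String text) {
        try {
            return "length " + firstLineLength(text);
        } catch (IOException e) {
            return "error: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe("hello\nworld")); // length 5
        System.out.println(describe(""));             // error: empty input
    }
}
```

Only `describe` contains a `catch` clause; the methods below it satisfy the compiler simply by declaring `throws IOException`.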

Posted in Programming

December 31st, 2009

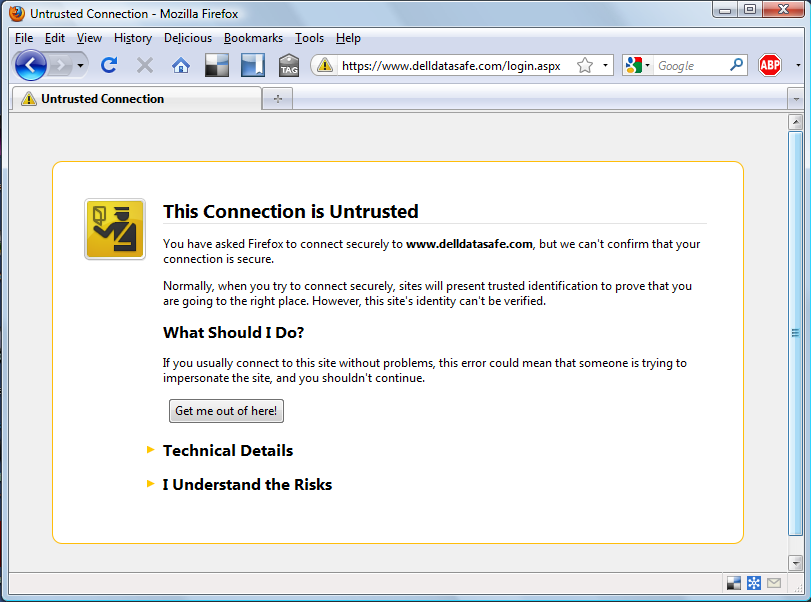

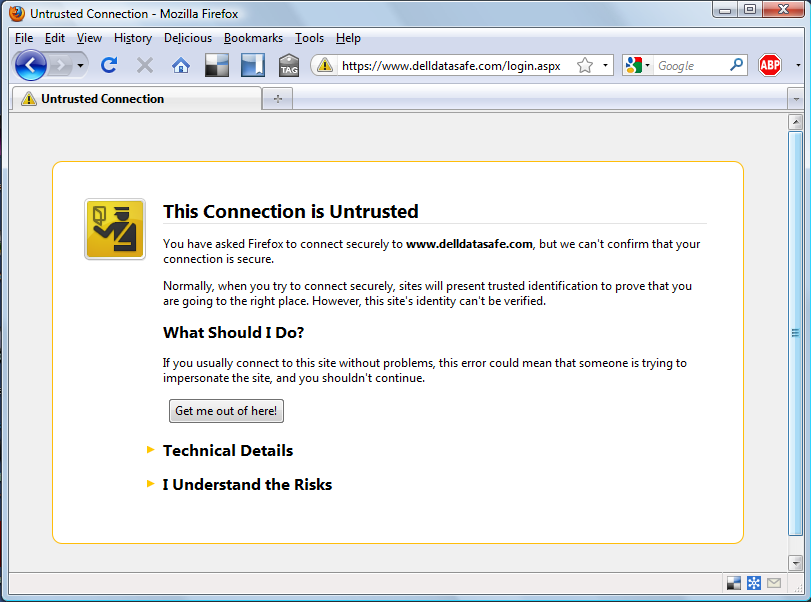

If Dell can’t even manage their public key certificates, how can I trust them to keep my data safe and secure?


Posted in Security, Windows

December 21st, 2009

Lately I’ve been thinking a lot about continuous deployment, for reasons I’m not yet at liberty to disclose. This has inspired me to improve the XOM release process and make it more of a one-click process, or, to be more accurate, a one-Ant-target process. I can now release a new version simply by typing:

```shell
$ ant -Dpassword=secret -Dwebpassword=other_secret release
```

This not only builds the entire project; it also tags the release in CVS, uploads the zip and tar.gz files to IBiblio, and uploads the documentation to my web host. It doesn’t yet file a bug requesting upload of the Maven files, but I’m working on that.

While setting this up, I realized that my organization is a little backwards. In particular, I’m pushing all the artifacts from my local system. Instead, I should simply commit everything to the source code control repository, tag a release, and then have the downstream artifacts, such as the zip and tar.gz files and the documentation, pulled from source code control onto the web servers.

There are some commercial products that are organized like this, including ThoughtWorks’s Cruise, but none of the major open source hosting sites, such as SourceForge and java.net, work like this. Certainly, SourceForge and similar sites have been major contributors to the open source revolution. They have enabled hobbyist developers working in their garages to use software development tools and techniques that were previously limited to corporations. They have enabled far-flung developers around the world to collaborate with each other far more effectively than they could by e-mailing each other tar files. They have removed the burden of system administration from many programmers, freeing them to devote more time to writing code. Make no mistake: SourceForge et al. are a real force for good in the community.

That said, the state of the art in software development has moved forward significantly since these sites were founded. CVS has mostly been replaced by Subversion. On some projects, Subversion has in turn been replaced by distributed version control systems such as Git and Mercurial. Unit testing and test-driven development have moved from extreme practices to standard operating procedure. Continuous integration using products like Hudson and CruiseControl is routine. Nonetheless, most project hosting sites still offer little beyond a source code repository, a bug tracker, and some web space. Those are important, but we can do so much more.

It’s time to think about what a modern project hosting site might want to offer and what it might look like.


Posted in Programming

September 27th, 2009

I have two 23″ monitors on my desktop at work and have worked that way for about three years now (aside from a brief flirtation with a single 30″ monitor in California). On Windows and Linux this is an incredibly productive setup. I can have a full-screen IDE open on one monitor and a full-screen web browser open on the other. The web browser gives me a huge reference library and easy access to many apps, including e-mail, calendar, and more, while the IDE lets me do my work. I can easily switch back and forth between them to surf or edit. It’s a smooth and fluid workflow. Even a single monitor twice the size doesn’t work as well, since you can’t easily arrange the two applications on one screen.

I’m a programmer, but the same is true for anyone who works primarily in one large application. For designers it might be Photoshop or QuarkXPress. For writers it may be Microsoft Word. For business folks it could be Excel. We all need a web browser open, and we all need our main productivity app. On Windows and Linux these days, this just works. You plug in two monitors. You open two apps. You move between them as you like and do your work. This is what it looks like:

On the Mac, however, it doesn’t work. There, on perhaps the first platform to support multiple monitors, and certainly the first consumer platform to do so, a two-monitor setup looks like this:

Do you see the difference?


Posted in Macs, User Interface

September 11th, 2009

The following example, taken from an introductory text on object-oriented programming, demonstrates a common flaw in object-oriented design. Can you spot it?

```java
public class Rectangle {

    private double width;
    private double height;

    public void setWidth(double width) {
        this.width = width;
    }

    public void setHeight(double height) {
        this.height = height;
    }

    public double getHeight() {
        return this.height;
    }

    public double getWidth() {
        return this.width;
    }

    public double getPerimeter() {
        return 2*width + 2*height;
    }

    public double getArea() {
        return width * height;
    }

}

public class Square extends Rectangle {

    public void setSide(double size) {
        setWidth(size);
        setHeight(size);
    }

}
```

(I’ve changed the language and rewritten the code to protect the guilty.)
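One commonly cited problem with this design, offered here as a sketch rather than as the full answer to the puzzle, is that `Square` inherits `setWidth` and `setHeight`. Code written against `Rectangle` can therefore turn a square into a non-square, a situation usually described as a violation of the Liskov substitution principle. The sketch repeats trimmed copies of the two classes (perimeter omitted) as nested classes so the file compiles on its own; `SquareDemo` and `resizeTo5x4` are hypothetical names.

```java
public class SquareDemo {

    // Trimmed copies of the Rectangle and Square classes above, nested so this file compiles alone.
    static class Rectangle {
        private double width;
        private double height;
        public void setWidth(double width) { this.width = width; }
        public void setHeight(double height) { this.height = height; }
        public double getWidth() { return width; }
        public double getHeight() { return height; }
        public double getArea() { return width * height; }
    }

    static class Square extends Rectangle {
        public void setSide(double size) {
            setWidth(size);
            setHeight(size);
        }
    }

    // Hypothetical client code written against Rectangle: it reasonably assumes
    // that width and height can be set independently to get a 5 x 4 shape.
    static double resizeTo5x4(Rectangle r) {
        r.setWidth(5);
        r.setHeight(4);
        return r.getArea();
    }

    public static void main(String[] args) {
        Square square = new Square();
        square.setSide(3);
        double area = resizeTo5x4(square); // compiles, because a Square is a Rectangle
        System.out.println(area);                                            // 20.0
        System.out.println(square.getWidth() + " x " + square.getHeight());  // 5.0 x 4.0
    }
}
```

The `Square` object ends up 5 wide and 4 high. Whether the remedy is to drop the inheritance, make the shapes immutable, or something else is a separate design decision; the sketch only demonstrates the symptom.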


Posted in Programming